Fractal Happy New Year 2025 from CIPARLABS!

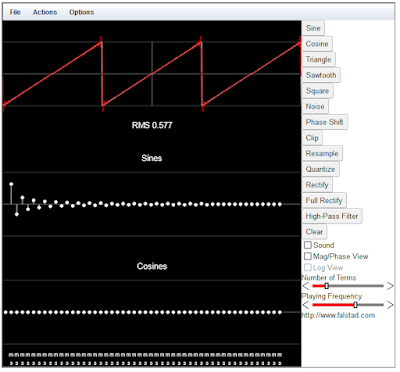

As we step into 2025, CIPARLABS reflects on a year of exceptional multidisciplinary research spanning artificial intelligence and neural networks, energy systems, healthcare, and complex systems theory. This year we wish you a happy "fractal" 2025, a nod to the science of complexity that shapes how we approach the problems we set out to solve.

The synergy between AI and complexity science has opened new routes to societal challenges. From energy grid management to medical diagnostics, our work shows how shared methodological frameworks can serve very different fields. This post celebrates our achievements, highlighting the unity of disciplines and the possibilities of a collaborative future.

2024 Highlights: Research Achievements

1. Transformative Advances in Energy Management

- Battery Modeling for Renewable Energy Communities: An extended Thevenin-based equivalent circuit model supports real-time energy management, balancing computational efficiency against accuracy in predicting battery behavior.

- Energy Load Forecasting Breakthrough: Integrating second-derivative features into machine learning models such as LSTM and XGBoost significantly improved peak-demand predictions, supporting microgrid stability.

- Smart Grid Fault Detection: A bilinear logistic regression model enabled interpretable, AI-driven fault detection, supporting more resilient energy infrastructure.
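
The post does not describe exactly how the second-derivative features were built. As a minimal sketch, assuming a univariate load series and a backward (causal) second difference x[t] - 2x[t-1] + x[t-2] as the discrete "second derivative", the inputs for a regressor such as XGBoost could be assembled as follows. The function names and the lag layout are hypothetical, not the published method.

```python
from typing import List, Optional, Tuple


def backward_diff2(load: List[float]) -> List[Optional[float]]:
    """Causal second-derivative estimate: x[t] - 2*x[t-1] + x[t-2].

    Only past samples are used, so the feature can feed a forecaster
    without leaking future values. The first two entries are undefined (None).
    """
    out: List[Optional[float]] = [None] * min(2, len(load))
    for t in range(2, len(load)):
        out.append(load[t] - 2.0 * load[t - 1] + load[t - 2])
    return out


def make_dataset(load: List[float], n_lags: int = 3) -> Tuple[List[List[float]], List[float]]:
    """Hypothetical layout: the last n_lags loads, the first difference and
    the second difference at time t are used to predict load[t + 1]."""
    d2 = backward_diff2(load)
    X: List[List[float]] = []
    y: List[float] = []
    start = max(n_lags - 1, 2)  # need n_lags past values and a defined d2[t]
    for t in range(start, len(load) - 1):
        lags = list(load[t - n_lags + 1 : t + 1])  # most recent n_lags values up to t
        d1 = load[t] - load[t - 1]                 # first difference (slope)
        X.append(lags + [d1, d2[t]])
        y.append(load[t + 1])                      # one-step-ahead target
    return X, y
```

The backward difference is chosen over the usual central difference because a central difference needs x[t+1], which is exactly the value being forecast.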

2. Innovations in Healthcare through Explainable AI

- Melanoma Diagnosis: We developed a custom CNN with feature injection and used Grad-CAM, LRP, and SHAP to interpret its predictions. This workflow sets a benchmark for explainability in computer-aided diagnostics.

- Text Classification in Healthcare Discussions: We compared traditional (bag-of-words) and transformer-based models (BERT, GPT-4) for classifying Italian-language healthcare discussions on social media, a step toward countering misinformation.

3. Exploring Human vs. Machine Intelligence

- Using complex systems theory, we compared the language generation dynamics of GPT-2, a large language model, with human-authored text. The study revealed distinct statistical properties, such as recurrence and multifractality, with applications to fake news detection and authorship verification.

Future Directions: Looking Ahead to 2025

CIPARLABS aims to deepen its focus on explainable AI for critical applications in energy, healthcare, and language modeling. We are committed to expanding our interdisciplinary efforts, incorporating insights from philosophy, complex systems, and AI ethics. Future work will include:

- Integrating advanced multimodal AI systems in healthcare.

- Adapting energy solutions to diverse legislative frameworks worldwide.

- Further bridging AI and human cognition to enhance ethical and transparent AI systems.

List of Papers (2024)

An Online Hierarchical Energy Management System for Renewable Energy Communities

Submitted to: IEEE Transactions on Sustainable Energy

Improving Prediction Performances by Integrating Second Derivative in Microgrids Energy Load Forecasting

Published in: IEEE IJCNN 2024

From Bag-of-Words to Transformers: A Comparative Study for Text Classification in Healthcare Discussions in Social Media

Published in: IEEE Transactions on Emerging Topics in Computational Intelligence

An Extended Battery Equivalent Circuit Model for an Energy Community Real-Time EMS

Published in: IEEE IJCNN 2024

Modeling Failures in Smart Grids by a Bilinear Logistic Regression Approach

Published in: Neural Networks, Elsevier

An XAI Approach to Melanoma Diagnosis: Explaining the Output of Convolutional Neural Networks with Feature Injection

Published in: Information, MDPI

Human Versus Machine Intelligence: Assessing Natural Language Generation Models Through Complex Systems Theory

Published in: IEEE Transactions on Pattern Analysis and Machine Intelligence

Many others are in progress!